by Rao Chejarla

Enterprises are caught between slow, rigid automation and fast but unpredictable AI agents. This document introduces Governed Dynamism, a practical approach that allows AI to explore new problems safely, captures what works, and converts it into reliable, auditable workflows that scale with confidence.

Every enterprise I talk to is stuck in the same trap. On one side: Legacy automation. BPMN workflows. Scripts. Integration platforms. Safe, auditable, reliable—and impossibly slow to build. Your team spends months mapping a single process. The backlog grows. Only the top 1% of use cases ever get automated.

On the other side: Agentic AI. The promise is intoxicating. “Just let the AI figure it out.” No more mapping. No more coding. The agent reasons, acts, and solves problems on the fly.

But here’s what the demos don’t show you: Agents drift.

An agent might solve a problem one way today and a completely different way tomorrow. It might skip steps. Hallucinate tools that don’t exist. Route a refund to the CEO’s inbox because it seemed helpful. And when your auditor asks “why did the system do that?”—good luck explaining that the AI “felt like it.”

The paradox of enterprise automation: Safe is slow. Fast is dangerous.

I’ve been developing an architectural pattern that resolves this paradox. I call it Governed Dynamism—and it might change how you think about AI in the enterprise.

The Cowpath Principle: Let Users Show You the Way

In urban planning, there’s a concept called “desire paths.”

Instead of deciding where people should walk and pouring concrete, smart planners do something counterintuitive: they plant grass and wait.

Over time, people trample natural paths through the grass: the routes they actually want to take. These desire paths are also known as “cowpaths.” Once the paths are visible, the planners pave them.

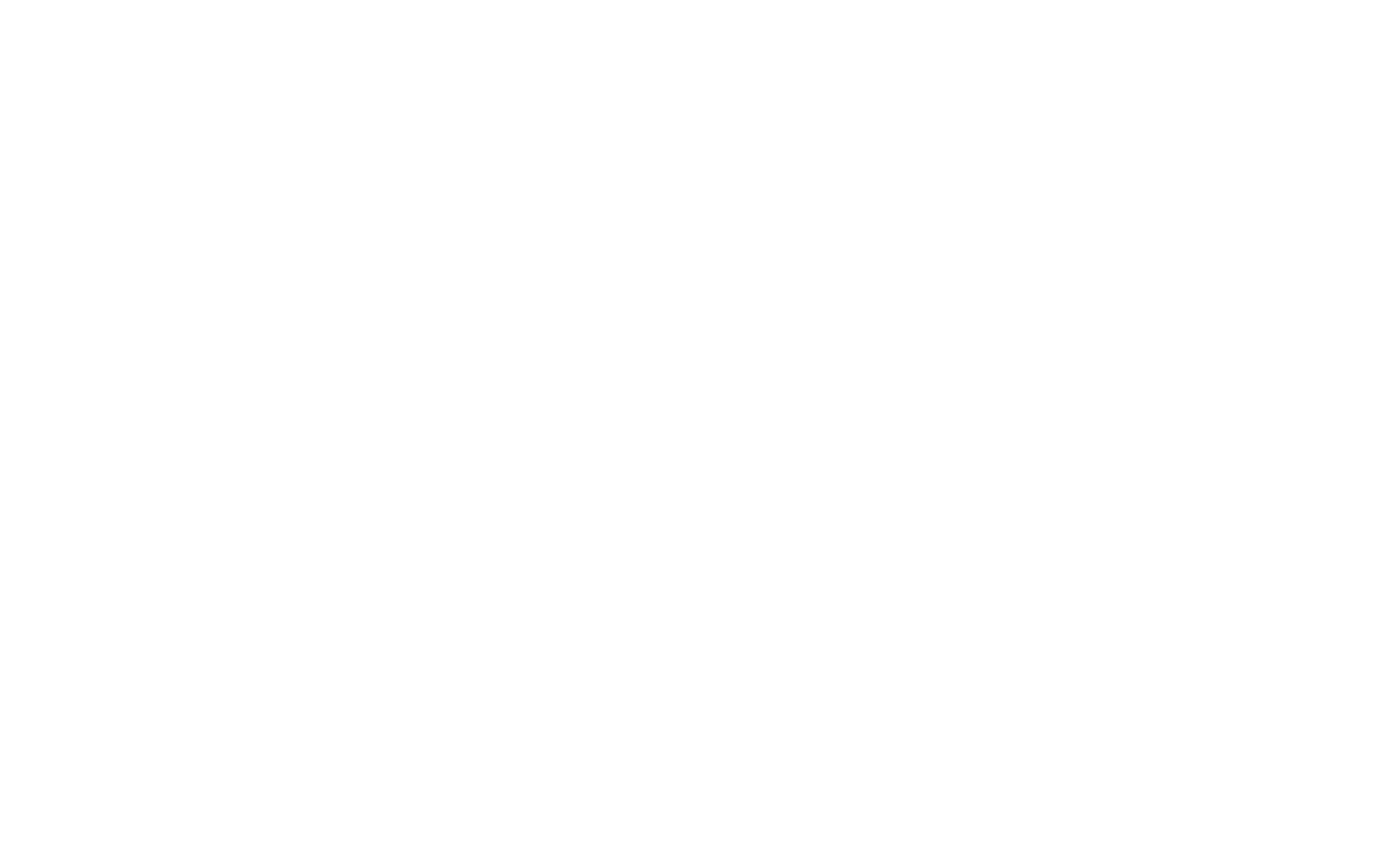

Governed Dynamism applies this principle to software automation:

This insight is simple but profound.

Use AI as a temporary pioneer, not a permanent worker

You pay the “AI tax”—the latency, cost, and risk of non-deterministic reasoning—during a discovery phase. The AI might try different approaches. Some will fail. Some will succeed but take suboptimal paths. Over multiple executions, patterns emerge: which tool sequences work, which ones perform best, which ones handle edge cases gracefully.

Once you have enough successful traces to identify a reliable pattern, you pave it. Then every future execution is instant, cheap, and fully auditable.

What This Looks Like at Scale

Day 1: The system is purely dynamic. AI agents solve novel problems with high cost and strict per-step governance. Every action is logged.

Day 30: The most common problems (“the head”) have been identified. Their traces are analyzed, parameterized, and promoted to approved workflows. Cost per transaction drops.

Day 100: The system is predominantly deterministic. 95%+ of traffic runs on paved highways—fast, cheap, and fully auditable. The AI planner sits ready to handle only the newest, rarest edge cases (“the tail”).

The Knowledge Graph isn’t a static database anymore. It’s a learning engine.

Every interaction makes the system smarter. Every resolved edge case becomes a candidate for the next paved workflow. The organization captures institutional knowledge automatically, in executable form.

The Three Guarantees

- Safety: Every action, dynamic or deterministic, passes through a governance layer. Permissions, policies, and approvals are enforced consistently. The AI cannot bypass controls.

- Efficiency: You pay the AI tax during discovery, across multiple executions while the system learns what works. But once a pattern is paved, future executions cost 95% less and run 50x faster. The more you use the system, the cheaper it gets.

- Evolution: The system learns from usage. Business rules emerge from observation. The backlog of “processes we should automate” shrinks automatically because automation writes itself.

The Shift in the Market

The industry has been asking the wrong question.

Wrong question: “How do we make agents safe enough for production?”

This leads to guardrails, filters, sandboxes—all trying to contain something fundamentally unpredictable. You’re fighting the nature of agents.

Better question: “How do we use agents to build things that are production-ready by design?”

This reframes the AI agent from a permanent worker (unpredictable, expensive, hard to audit) to a temporary pioneer (explores the unknown, records what works, then gets out of the way).

It’s not “agents vs. workflows.” It’s agents becoming workflows.

The cowpath becomes the highway.

Getting Started: Three Steps This Week

If this resonates, here are three things you can do this week:

- Audit Your Agent’s Decisions

Pick one AI workflow in your org. Can you explain why it made each choice? If not, you have a drift problem.

- Identify Your Cowpaths

What are the 5 most common requests your agents handle? These are candidates for paving. Even manual conversion to deterministic workflows will cut cost and risk.

- Separate Intent from Implementation

Review your tool design. Are you exposing implementation choices (which vendor, which API, which queue) that should be policy-driven? Consolidate them.
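As a rough illustration of separating intent from implementation, the sketch below exposes a single intent-level tool to the agent and lets a policy object pick the vendor. Every name here (`notify_customer`, `RoutingPolicy`, the two vendor adapters, routing by region) is hypothetical and chosen only for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical vendor adapters; real ones would call the vendors' APIs.
def send_via_vendor_a(recipient: str, body: str) -> str:
    return f"vendor_a:{recipient}:{body}"

def send_via_vendor_b(recipient: str, body: str) -> str:
    return f"vendor_b:{recipient}:{body}"

ADAPTERS: Dict[str, Callable[[str, str], str]] = {
    "vendor_a": send_via_vendor_a,
    "vendor_b": send_via_vendor_b,
}

@dataclass
class RoutingPolicy:
    """Policy-owned choice of implementation, keyed by region (an assumption)."""
    default_vendor: str
    vendor_by_region: Dict[str, str] = field(default_factory=dict)

    def vendor_for(self, region: str) -> str:
        return self.vendor_by_region.get(region, self.default_vendor)

def notify_customer(recipient: str, body: str, region: str,
                    policy: RoutingPolicy) -> str:
    """The only tool the agent sees: it states intent, not which vendor to use."""
    vendor = policy.vendor_for(region)
    return ADAPTERS[vendor](recipient, body)
```

With this shape, swapping a vendor is a policy change, and the agent's tool list stays the same.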

Technical Deep Dive

_For architects and engineers who want the implementation details._

Workflow Lifecycle States

Every workflow exists in one of six explicit states:

DRAFT → DYNAMIC → CANDIDATE → REVIEW → APPROVED → DEPRECATED

This provides full audit trails, enables rollback, and makes promotion trackable.
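One way to make these states explicit is a small state machine that records every transition. This is a minimal sketch, not the actual implementation: the forward transitions follow the arrow order above, while the REVIEW to CANDIDATE rejection edge, the `Workflow` class, and the `actor` field are assumptions added for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple

class State(Enum):
    DRAFT = "DRAFT"
    DYNAMIC = "DYNAMIC"
    CANDIDATE = "CANDIDATE"
    REVIEW = "REVIEW"
    APPROVED = "APPROVED"
    DEPRECATED = "DEPRECATED"

# Forward edges mirror the lifecycle order in the text.
# REVIEW -> CANDIDATE (a rejected review) is an assumed extra edge.
ALLOWED = {
    State.DRAFT: {State.DYNAMIC},
    State.DYNAMIC: {State.CANDIDATE},
    State.CANDIDATE: {State.REVIEW},
    State.REVIEW: {State.APPROVED, State.CANDIDATE},
    State.APPROVED: {State.DEPRECATED},
    State.DEPRECATED: set(),
}

@dataclass
class Workflow:
    name: str
    state: State = State.DRAFT
    # Audit trail of (from_state, to_state, actor) tuples.
    history: List[Tuple[State, State, str]] = field(default_factory=list)

    def transition(self, target: State, actor: str) -> None:
        """Move to `target` if the edge is allowed, recording who did it."""
        if target not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {target.value} is not allowed")
        self.history.append((self.state, target, actor))
        self.state = target
```

Because every promotion goes through `transition`, the `history` list doubles as the audit trail, and a skipped step (for example CANDIDATE straight to APPROVED) fails loudly.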

Multi-Trace Convergence

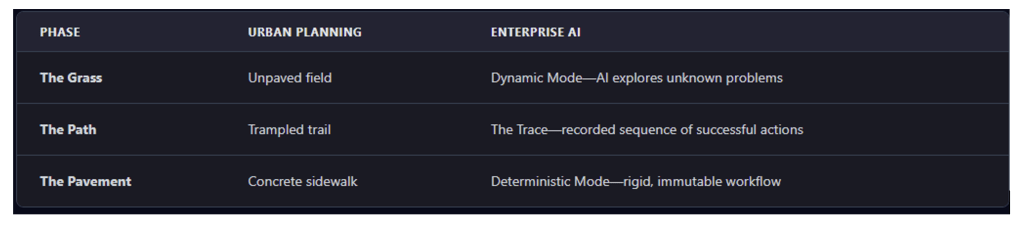

A single trace only captures one path, so the system requires multiple traces before promotion.
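A convergence check could be sketched as follows, assuming each successful trace is reduced to its ordered list of tool names. The function name `converged_path` and both thresholds (`min_traces`, `min_share`) are illustrative assumptions, not values taken from the system described here.

```python
from collections import Counter
from typing import List, Optional, Sequence, Tuple

def converged_path(
    traces: Sequence[Sequence[str]],
    min_traces: int = 10,     # assumed minimum number of successful traces
    min_share: float = 0.8,   # assumed share that must agree on one path
) -> Optional[Tuple[str, ...]]:
    """Return the dominant tool sequence if enough traces agree on it."""
    if len(traces) < min_traces:
        return None  # not enough evidence yet
    counts = Counter(tuple(t) for t in traces)
    path, hits = counts.most_common(1)[0]
    return path if hits / len(traces) >= min_share else None
```

A `None` result means the intent stays dynamic for now; a returned path becomes a candidate for review, not an approved workflow.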

Parameter Synthesis

Converting traces to workflows requires classifying each parameter:

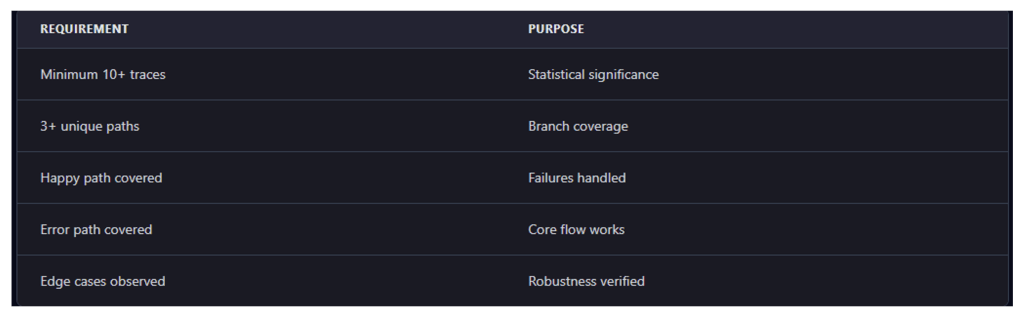
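The classification step might look like the sketch below, under the assumption that each trace has been flattened to a dictionary of parameter values. The two labels used here, `constant` and `input`, are a simplification invented for the example; the actual categories may differ.

```python
from typing import Any, Dict, List

def classify_parameters(traces: List[Dict[str, Any]]) -> Dict[str, str]:
    """Label each parameter by how it behaves across traces.

    'constant': present in every trace with one value, so it can be fixed
                in the paved workflow.
    'input':    varies or is sometimes absent, so it becomes a workflow input.
    """
    labels: Dict[str, str] = {}
    keys = sorted({k for t in traces for k in t})
    for key in keys:
        in_every_trace = all(key in t for t in traces)
        # repr() lets unhashable values such as lists be compared.
        distinct = {repr(t[key]) for t in traces if key in t}
        is_constant = in_every_trace and len(distinct) == 1
        labels[key] = "constant" if is_constant else "input"
    return labels
```

A human reviewer would still need to confirm the labels, since a value that happened to be identical in every observed trace is not guaranteed to be a true constant.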

Intent Routing

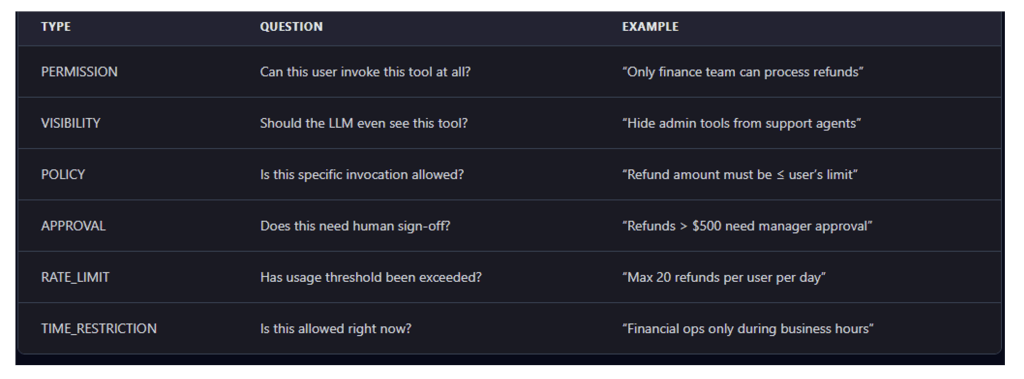
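In code, the routing decision could be as small as the sketch below: send an intent to its approved workflow when one exists, and fall back to the AI planner otherwise, including for intents marked "always dynamic". The `route` function, the handler signature, and the string labels are assumptions made for illustration.

```python
from typing import Callable, Dict, Set, Tuple

Handler = Callable[[dict], str]

def route(
    intent: str,
    approved: Dict[str, Handler],   # intent -> paved, approved workflow
    always_dynamic: Set[str],       # intents that must never be paved
    planner: Handler,               # the dynamic AI planner
) -> Tuple[str, Handler]:
    """Pick the execution path for an intent and say which path was chosen."""
    if intent not in always_dynamic and intent in approved:
        return "workflow", approved[intent]
    return "planner", planner
```

Returning the path label alongside the handler makes it easy to log how much traffic runs on paved workflows versus the planner.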

Governance Shield (Two-Stage)

Stage 1: Visibility (Before LLM sees tools) Filter tool bag by user role and permissions. LLM only sees tools it’s allowed to use.

Stage 2: Policy (Before execution) Validate parameters against policies:

- Amount limits

- Time restrictions

- Rate limits

- Approval requirements
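The two stages can be sketched as two small functions plus a combined check. This is a minimal sketch covering only amount limits and approval requirements; time and rate limits are omitted, and the `Tool` fields, function names, and decision strings are all assumptions for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

@dataclass
class Tool:
    name: str
    allowed_roles: Set[str]
    max_amount: Optional[float] = None      # hard amount limit (assumed field)
    approval_above: Optional[float] = None  # above this, a human must approve

def visible_tools(tools: List[Tool], role: str) -> List[Tool]:
    """Stage 1: hide tools the caller's role may not use, before the LLM sees them."""
    return [t for t in tools if role in t.allowed_roles]

def check_policy(tool: Tool, params: Dict[str, float]) -> str:
    """Stage 2: validate parameters just before execution."""
    amount = params.get("amount", 0.0)
    if tool.max_amount is not None and amount > tool.max_amount:
        return "deny"
    if tool.approval_above is not None and amount > tool.approval_above:
        return "needs_approval"
    return "allow"

def authorize(tools: List[Tool], role: str, tool_name: str,
              params: Dict[str, float]) -> str:
    """Run both stages; a tool that was never visible is denied outright."""
    by_name = {t.name: t for t in visible_tools(tools, role)}
    tool = by_name.get(tool_name)
    return "deny" if tool is None else check_policy(tool, params)
```

Running Stage 1 again inside `authorize` means a hallucinated or out-of-role tool call is rejected at execution time even if it somehow appears in the model's output.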

Policy Types

The Paving Formula

Discovered Traces + Convergence Analysis + Parameter Synthesis + Human Review = Deterministic Workflow

The system captures what worked, identifies patterns, generalizes parameters, and requires approval before promotion.

Conclusion

The future of enterprise AI isn’t autonomous agents running wild. It’s governed systems that learn, codify, and scale institutional knowledge.

It’s not about replacing humans. It’s about capturing human judgment once and executing it perfectly forever.

The cowpath becomes the highway.

Frequently Asked Questions

How is this different from regular workflow automation?

Traditional workflow automation requires humans to define every step upfront. Governed Dynamism lets AI discover the steps, then converts those discoveries into traditional workflows. You get the flexibility of agents during exploration and the reliability of deterministic systems in production.

What if the AI discovers a bad pattern?

Every promoted workflow goes through human review. The system shows the traces, the extracted logic, and the proposed definition. A human must approve before any cowpath becomes a highway.

How many traces do you need before paving?

It depends on complexity. Simple linear flows might need 10-20 traces. Flows with multiple branches might need 50+. The system tracks coverage metrics and won’t propose promotion until minimum thresholds are met.

Can I manually create workflows too?

Yes. Governed Dynamism doesn’t replace manual workflow authoring—it augments it. You can create workflows from scratch, or start with AI discovery and refine the result.

What about workflows that should stay dynamic?

Some tasks are inherently creative or exploratory. You can mark certain intents as “always dynamic” so they never get paved. The AI handles them every time, with full governance.
